Active Inference, A Primer

Inactive inference is where you're laid on the couch trying to work out if the omens say you can get up today. Active inference is more complicated.

To stay alive, organisms want to exist within a certain metabolic range. You want your body temperature to be around 37°C, you want to have enough food to sustain your metabolism, and you want to be receiving oxygen. If you don’t do these things, you usually die.

Much of biology involves attempting to explain how organisms maintain their vitals. One of the most influential theories in neuroscience right now is that humans operate via a series of predictive models which help them act in their environments. Since the brain doesn’t have direct access to the outside world, it has to make ‘guesses’ about what is out there.

To take an example proffered by Daniel Dennett in Intuition Pumps, imagine you’re in a room with no doors or windows. On the walls of this room are thousands of buttons, with different colours, shapes, and sizes. If you press the buttons, you get feedback from a dizzying array of dials and needles.

How do you know what to press? How do you know what to do? The good news is that if you don’t figure it out, you will die.

This is analogous to what the brain is doing. It has to press buttons and adjust settings in order to keep you alive, without any direct access to the world outside. All it receives is electrical signals from the eyes, ears, skin, and so on.

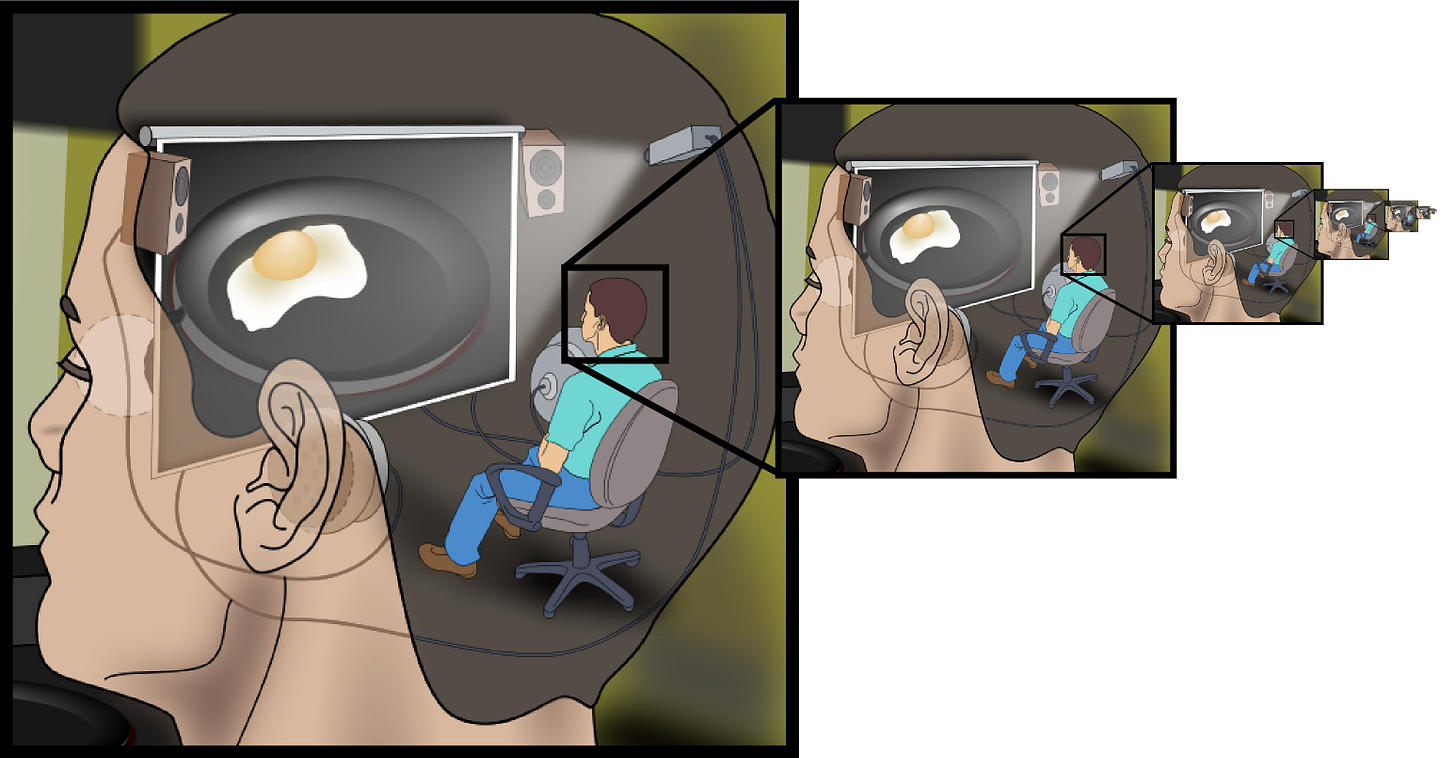

A note - try not to fixate on the idea of the little dude/dudette in your skull - this leads to the infinite regress of the homunculus problem, where the question then becomes: who’s in the little dude’s head? This is eggcellently illustrated in the image below:

One dominant paradigm for explaining how the brain does this is known as the free energy principle.

Free energy is a concept borrowed from statistical physics, and is notoriously complicated to understand. I think the way to get to grips with this concept is to ignore it and then come back to it later. And no, disappointingly, I’m not ending this blog post here.

Active inference, a concept buried in the free energy principle, is simpler to follow and is useful as a model even if you don’t really follow all of the complex mathematics behind free energy (like me), so we’ll start there.

The basic idea is that your brain has a series of top-down models about how the world works. Some of these models are hardwired into you from birth, and some of them are learned from experience. These models are constantly tested against a stream of bottom-up data from the external world. So if your model says that pigs can fly, and you hang out a lot with pigs, and you never notice them flying no matter how many times you glue wings onto them, this model will be revised.

This is a form of Bayesian inference. Thomas Bayes was an 18th-century reverend who advanced statistical theory through his eponymous rule:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

P(A) is the probability of A occurring, and P(B) is the probability of B occurring.

P(A|B) is the probability of A, given that B occurs, and P(B|A) is the probability of B, given that A occurs.
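To make the rule concrete, here is a minimal Python sketch. The scenario and numbers are invented purely for illustration: A is "it rained overnight", B is "the pavement is wet", and P(B) is worked out with the law of total probability.

```python
def bayes(p_b_given_a: float, p_a: float, p_b: float) -> float:
    """Bayes' rule: P(A|B) = P(B|A) * P(A) / P(B)."""
    return p_b_given_a * p_a / p_b

# Illustrative, made-up numbers: A = "it rained overnight", B = "the pavement is wet".
p_a = 0.3              # P(A): prior probability that it rained
p_b_given_a = 0.9      # P(B|A): the pavement is usually wet after rain
p_b_given_not_a = 0.2  # P(B|not A): wet for other reasons (sprinklers, spills)

# P(B) via the law of total probability: sum over "rained" and "didn't rain".
p_b = p_b_given_a * p_a + p_b_given_not_a * (1 - p_a)

posterior = bayes(p_b_given_a, p_a, p_b)
print(f"P(rain | wet pavement) = {posterior:.3f}")
```

With these made-up numbers, a wet pavement lifts the probability of overnight rain from 0.3 to about 0.66: a big update, but not certainty, because sprinklers exist.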

There are many good guides to Bayes’ Theorem on the internet; all we need to say here is that it is a very powerful tool, and it can be used to approximate parts of brain function.

Here’s how.

Let’s say we take an initial idea about the world. This represents one of the brain’s models, and the brain is pretty sure about it, but not totally sure. Let’s put this idea on a very sexy graph:

Here’s a belief, or a prior.

That’s not a belief, you’ve just drawn a wave.

This is a way of modelling a belief. The idea is that we assume a prior distribution p(θ) over all possible world states. This is an idea about what we expect the world to look like, but it is inherently blurry, because we do not have perfectly precise information about the world.

There are two relevant types of chance here. One is the probability of the event itself, on the x-axis. The other is how much credence we give to each of those possible probabilities, on the y-axis (strictly speaking, a probability density). For instance, we could be 99% sure that something has a 50% probability of happening, like a coin landing tails (it could be a dodgy coin, or the coin could land on its rim). We very rarely get to 100% certainty about anything. A computer simulating a coin flip won’t have the coin land on its rim, but it can crash, run out of power, or contain malware that makes the simulated coin land on its rim, and these are all relevant outcomes.
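Here is one way to sketch that "wave" in Python, assuming we stick with the coin example: θ is the coin's probability of landing tails (the x-axis), and the prior says how much credence we give to each value of θ (the y-axis). The Beta-shaped curve and its parameters are my choice for illustration, discretised on a grid so only the standard library is needed.

```python
def beta_prior(a: float, b: float, n: int = 1001) -> tuple[list[float], list[float]]:
    """Discretised Beta(a, b) prior over theta, the coin's probability of tails."""
    thetas = [i / (n - 1) for i in range(n)]
    weights = [t ** (a - 1) * (1 - t) ** (b - 1) for t in thetas]
    total = sum(weights)
    return thetas, [w / total for w in weights]  # normalise so credences sum to 1

# A confident prior centred on a fair coin (a = b = 200 is an arbitrary choice).
thetas, prior = beta_prior(200, 200)

mean = sum(t * p for t, p in zip(thetas, prior))
near_fair = sum(p for t, p in zip(thetas, prior) if 0.45 <= t <= 0.55)
print(f"mean theta = {mean:.3f}, P(0.45 <= theta <= 0.55) = {near_fair:.3f}")
```

Almost all of the credence sits between 0.45 and 0.55, which is roughly what being "pretty sure, but not totally sure" that the coin is fair looks like.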

So, there is some probability that our belief is true, and this probability depends on both observable variables (from your senses) and non-observable variables (hidden states of the world that you do not have access to). This belief might be something like: I am in London, where camels do not generally reside, so I assign a low probability to seeing a camel.

New observations can then update your beliefs. You think you see a camel on the street, so your posterior belief then updates to reflect a combination of your prior belief and the new observation:

Your beliefs do not blindly update to the new observation, for good reason. Perhaps you have mistaken an oddly shaped lamppost for a camel. Perhaps you have seen an advert for camels to purchase, and due to your poor eyesight, your first glimpse of the advert gave it depth.

What happens next? You look more closely at the thing you thought was a camel, and realise that it is, in fact, not a camel. In this case we would probably model this as totally resetting your belief to your original prior, but there are plenty of cases where this would not happen.
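The glimpse-then-closer-look story can be sketched as two rounds of Bayes' rule on a yes/no hypothesis. Every number here is invented: a 0.1% prior on a camel, a glimpse that lampposts and adverts can also produce, and a closer look that is far more reliable.

```python
def update(prior: float, p_obs_if_camel: float, p_obs_if_no_camel: float) -> float:
    """Return P(camel | observation) via Bayes' rule for a yes/no hypothesis."""
    evidence = p_obs_if_camel * prior + p_obs_if_no_camel * (1 - prior)
    return p_obs_if_camel * prior / evidence

prior = 0.001  # camels are rare on London streets

# First glimpse: something camel-shaped. Lampposts and adverts sometimes look like this too.
after_glimpse = update(prior, p_obs_if_camel=0.9, p_obs_if_no_camel=0.05)

# Closer look: clearly not a camel. This observation is much more reliable than the glimpse.
after_look = update(after_glimpse, p_obs_if_camel=0.01, p_obs_if_no_camel=0.99)

print(f"prior {prior:.4f} -> glimpse {after_glimpse:.4f} -> closer look {after_look:.4f}")
```

The glimpse raises the belief roughly eighteen-fold but still leaves it under 2%, because the prior was so low; the closer look then pushes it back below where it started.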

The idea is that in neuronal populations, the brain is combining top-down predictions (no camels here) with bottom-up prediction errors (I’m seeing a camel, guv!) and using that to generate action sequences (look again at potential camel sighting, get ready to @LondonZoo on Twitter).
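A toy version of that combination, loosely in the style of textbook predictive-coding tutorials, is below. It uses a single made-up "camel-ness" variable rather than anything a real neuronal population computes: a belief is repeatedly nudged by a bottom-up prediction error and a top-down one, each scaled by an assumed precision.

```python
def settle_belief(obs: float, prior_mean: float, pi_obs: float, pi_prior: float,
                  lr: float = 0.05, steps: int = 500) -> float:
    """Toy one-level predictive-coding loop (illustrative, not a neural model).

    The belief mu is nudged by two precision-weighted prediction errors: a
    bottom-up error (obs - mu) and a top-down error (mu - prior_mean). Each step
    is gradient descent on 0.5*pi_obs*(obs - mu)**2 + 0.5*pi_prior*(mu - prior_mean)**2.
    """
    mu = prior_mean  # start from the top-down prediction
    for _ in range(steps):
        bottom_up_error = obs - mu
        top_down_error = mu - prior_mean
        mu += lr * (pi_obs * bottom_up_error - pi_prior * top_down_error)
    return mu

# Hypothetical "camel-ness" signal: the prior says 0 (no camels), the senses say 1.
mu = settle_belief(obs=1.0, prior_mean=0.0, pi_obs=1.0, pi_prior=3.0)
print(f"settled belief: {mu:.3f}")
```

The belief settles at the precision-weighted average (pi_obs * obs + pi_prior * prior_mean) / (pi_obs + pi_prior), here 0.25: the senses shift it, but a confident prior keeps it closer to "no camels here". The step size lr has to stay small relative to the precisions (lr * (pi_obs + pi_prior) < 2), or the loop oscillates instead of settling.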

Does that all sound a bit unexciting?

Yes King Cnut, if that is your real name, if I don’t know what things are, I look at them more closely. This is what is revolutionising neuroscience? You guys really need to sort your shit out.

Sometimes when you use a new framework, it looks relatively unexciting, but then as you work through the implications, it allows you to explain some weird and wacky phenomena. This theory has a lot of cool implications!

Firstly, that process of updating your beliefs is critical. What if people were faster or slower in updating their beliefs? Well, then you might see behaviours that explain mental illnesses. For instance, most people don’t update their beliefs very much. Contrary to what every podcast about cognitive biases will tell you, this is a good thing! If you constantly updated your beliefs in response to new information, the world would be a terrifying place. This over-updating is thought to be a possible explanation for schizophrenia.
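One crude way to picture "updating too much" is a belief that moves a fixed fraction of the way toward every new observation. This is a toy sketch under that assumption, not a model of schizophrenia: the world never changes, the observations are pure noise, and the only difference between the two runs is how heavily each observation is weighted.

```python
import random
import statistics

def track(weight: float, n: int = 2000, seed: int = 0) -> list[float]:
    """Move a belief toward each noisy observation by a fixed fraction `weight`.

    The true hidden state never changes (it is 0); observations are that state plus noise.
    """
    rng = random.Random(seed)
    belief, history = 0.0, []
    for _ in range(n):
        obs = rng.gauss(0.0, 1.0)          # pure noise around the unchanging truth
        belief += weight * (obs - belief)  # move part-way toward the new observation
        history.append(belief)
    return history

for w in (0.05, 0.9):
    print(f"weight={w}: belief std = {statistics.pstdev(track(w)):.3f}")
```

With a weight of 0.9 the belief chases every flicker of noise; with 0.05 it stays near the true value. A world that seemed to change that much, that often, would indeed feel terrifying.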

Schizophrenia is a mental disorder characterised by three main types of symptom: positive symptoms, negative symptoms, and cognitive symptoms. Positive symptoms are prominent in psychotic episodes, and involve severe disturbances in mental function, in the form of hallucinations and delusions. Negative symptoms refer to the absence of normal social functioning and interpersonal behaviours, such as the loss of ability to feel or express emotions. Cognitive symptoms, often considered the hardest to deal with because they persist, are defined by deficits in working memory and attention.

One of the predictions that follows from active inference is that, because your models care about the mismatch between predictions and incoming data, they should downweight the sensory consequences of your own movements and actions. You already know what you’re going to do, so you don’t need to factor that into the models as strongly. The signal thought to make this possible is known as corollary discharge, or efference copy: a copy of the motor command that lets the brain predict what its own action will feel like.

This means that people cannot judge the amount of force they apply as accurately as they think they can. As an idea, this is probably as interesting as science gets, because you can test it by getting people to hit things. If I get a robot to punch your chest (so we can precisely measure the force applied) and then ask you to match that force on the robot’s chest, the majority of people will hit the robot harder than the robot hit them.

They don’t actually get people to hit each other, sadly. They use a small device that you push, and it measures how hard you push. This is lame and we should do more fisticuff-based neuroscience. Nonetheless, the results are consistent with the idea that people downweight their own movements and actions.

If you take a second to imagine a fight between two people in a pub, where they both keep hitting each other with the same strength that they perceive they’ve been hit with, you can see why fights might escalate rapidly. I always think I’ve hit you less hard than you’ve hit me, so on my next punch I hit you harder in turn and you return the favour until neither of us has any teeth and we’re both calling our mothers in tears (I assume this is how all fights end).
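The pub-fight arithmetic can be sketched in a few lines. The assumption, which is mine and deliberately simplified, is that a hit you receive is felt at full strength while your own hits feel weaker by a fixed fraction (attenuation), so each person hits back hard enough for it to "feel" equal.

```python
def escalate(first_hit: float, attenuation: float, rounds: int) -> list[float]:
    """Forces in a tit-for-tat exchange where each person matches what they felt.

    Incoming hits are felt at full strength; your own hits feel weaker by
    `attenuation` (a fraction from 0 up to, but not including, 1), so you push
    harder to make them feel equal.
    """
    forces = [first_hit]
    for _ in range(rounds):
        felt = forces[-1]                           # the other person's hit, felt in full
        forces.append(felt / (1.0 - attenuation))  # hit back until it feels the same
    return forces

print([round(f, 2) for f in escalate(first_hit=1.0, attenuation=0.3, rounds=5)])
```

With an assumed 30% attenuation, the forces grow about sixfold in five exchanges. Lowering attenuation toward 0, as you might expect with weaker corollary discharge, slows the escalation, and at exactly 0 every hit stays the same strength.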

Schizophrenic people perform much better on this task than non-schizophrenic people do. They are more accurately able to match the robot’s force. It’s thought that schizophrenics have poorer corollary discharge signalling, and so don’t downweight their own actions as effectively.

Have you ever noticed that you can’t tickle yourself? Corollary discharge is likely why. You are downweighting your own ‘tickling input’. But schizophrenics are surprisingly good at tickling themselves, consistent again with the idea that their own inputs are being mischaracterised in an internal model.

But the model is better than that, because it goes on to provide an effective explanation for the positive symptoms of schizophrenia. For instance, some schizophrenic people hear voices that are not really there.

Imagine that I failed to generate an accurate prediction of my inner speech. This would induce large amplitude prediction errors over the consequences of that speech. Now, these large amplitude prediction errors themselves provide evidence that it could not have been me speaking; otherwise, they would have been resolved by my accurate predictions. Therefore, the only plausible hypothesis that accounts for these prediction errors is that someone else was speaking.
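That inference can be sketched as a comparison of two hypotheses about where a voice came from. The assumption here (a toy, not a clinical model) is that if I produced the speech, my own prediction leaves only small errors, modelled as a narrow Gaussian, while speech from someone else leaves larger, more widely spread errors.

```python
import math

def gaussian_pdf(x: float, sd: float) -> float:
    """Density of a zero-mean normal distribution with standard deviation sd."""
    return math.exp(-0.5 * (x / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def p_someone_else(error: float, p_other_prior: float = 0.5,
                   sd_if_self: float = 0.2, sd_if_other: float = 1.0) -> float:
    """Posterior probability that a voice came from someone else, given its prediction error.

    Assumption: if I produced the speech, my own prediction leaves only small errors
    (narrow spread, sd_if_self); if someone else did, errors are spread more widely.
    """
    like_self = gaussian_pdf(error, sd_if_self)
    like_other = gaussian_pdf(error, sd_if_other)
    evidence = like_self * (1 - p_other_prior) + like_other * p_other_prior
    return like_other * p_other_prior / evidence

for err in (0.1, 0.5, 1.0):
    print(f"prediction error {err}: P(someone else) = {p_someone_else(err):.3f}")
```

Small errors favour "that was me"; large ones favour "someone else". On this account, failing to predict your own inner speech produces exactly the large errors that tip the answer toward someone else.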

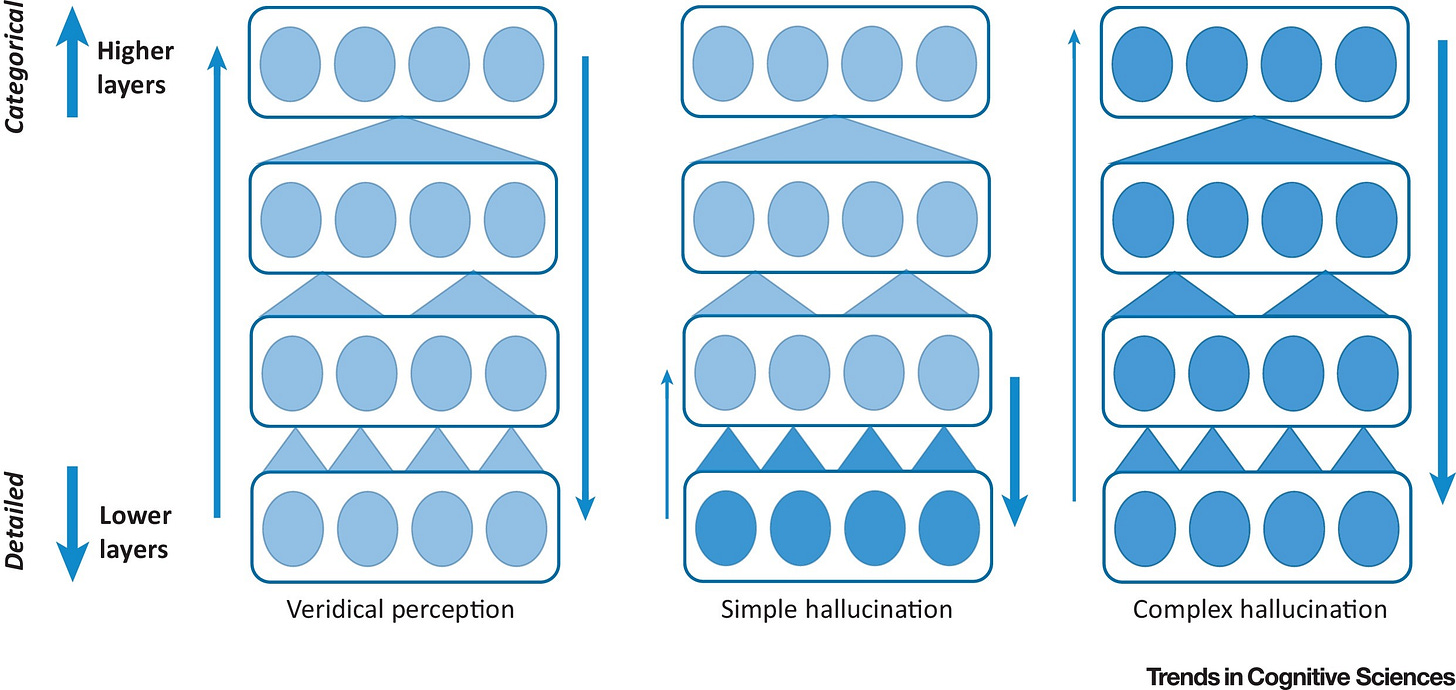

The problem might lie somewhere in the nexus of predictions and prediction errors, where errors at different levels of the cortical hierarchy create different emergent phenomena - simple hallucinations versus complex hallucinations.

The diagram below tries to convey the different layers of the cortical hierarchy. On the left, bottom-up and top-down signals are balanced, whereas on the right, the brain’s strong priors about what it expects to see are totally overriding error signals, generating hallucinations.
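The balance in that diagram can be caricatured with a single precision-weighted average of what the prior expects and what the senses report. The numbers are arbitrary; the point is what happens when the prior's precision swamps the sensory precision.

```python
def percept(prior_mean: float, sensory_input: float,
            prior_precision: float, sensory_precision: float) -> float:
    """Precision-weighted combination of a top-down prior and a bottom-up signal."""
    total = prior_precision + sensory_precision
    return (prior_precision * prior_mean + sensory_precision * sensory_input) / total

# The world contains nothing (input 0.0); the prior expects something (1.0).
balanced = percept(prior_mean=1.0, sensory_input=0.0,
                   prior_precision=1.0, sensory_precision=1.0)
prior_dominated = percept(prior_mean=1.0, sensory_input=0.0,
                          prior_precision=50.0, sensory_precision=1.0)
print(f"balanced: {balanced:.2f}, prior-dominated: {prior_dominated:.2f}")
```

With equal precisions the percept lands halfway; when the prior is fifty times more precise, the percept is about 0.98 "something there" even though the input says nothing is. That's the hallucination case, in cartoon form.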

Let’s think about action and perception. In folk-psychological ideas of how the mind works, these two concepts are different. You perceive the world, think about it, and then decide how to act upon it. Here’s a fun little model I’ve just made to represent this:

Pretty good, right? My graphic design skills are getting better every day. But this model has a lot of flaws. Firstly, a lot of the time we act without perceiving or thinking about our actions at all. Secondly, the bubble of ‘thought’ is not really a helpful concept; what are these thoughts? Are these ideas stored in the working memory? Or remembered states that we can activate at any time? What about times when we need to modulate both action and perception almost simultaneously, such as when a task needs a lot of precision?

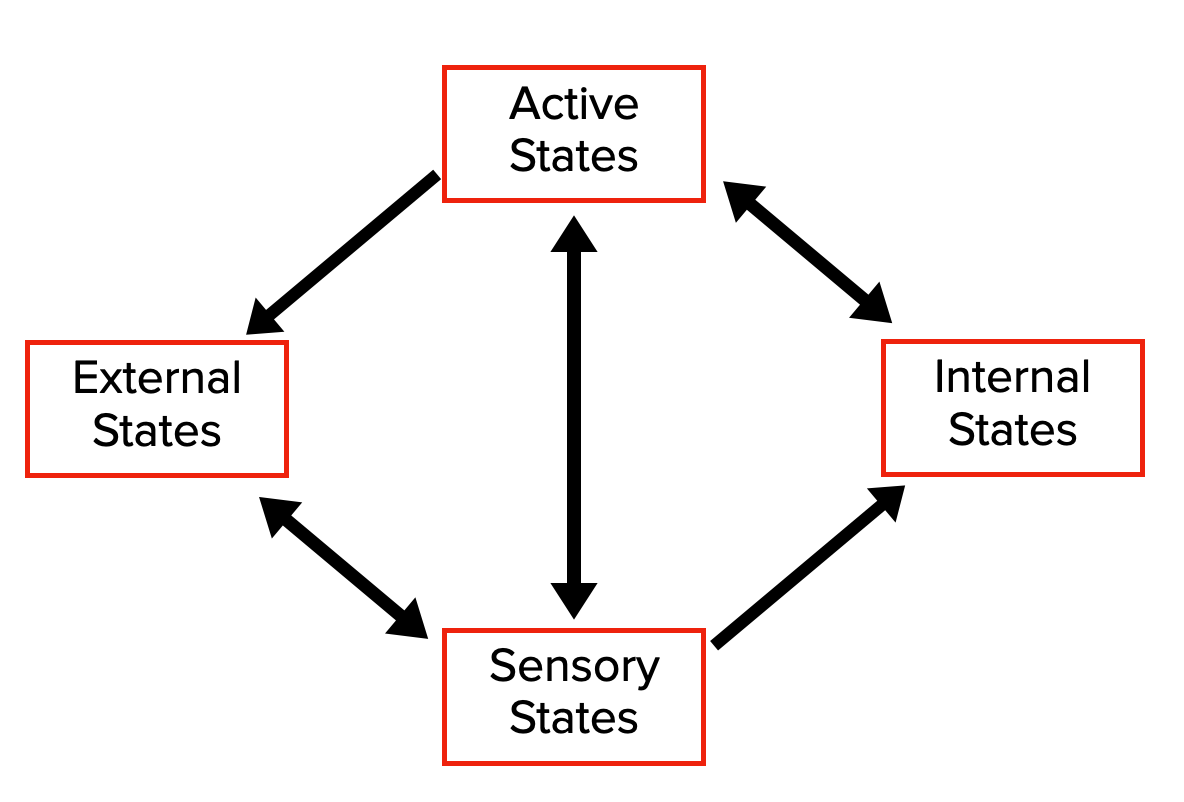

The free energy principle operates with a different model of the world, where the environment is separated from the internal states of the brain by a boundary. Here’s what that looks like:

The two boxes of active states and sensory states act as a dividing bridge between the internal and the external world. This means that action and perception are unified.

Woah, man. But wait. How exactly does that mean action and perception are unified…?

Let’s rewind to our Bayesian models. When we’re updating our beliefs, we’re essentially trying to match the predictions we make to the outside world, or, put another way, to minimise prediction error.

There are two ways to do that: the first is to change our internal states, so that we better reflect the external states of the world. This is changing perception.

The second is to change the external states of the world, so that they better reflect our internal states. This is action.
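Both routes can be sketched side by side. The hand example and numbers are hypothetical: a belief says the hand is at 10 cm, the hand is actually at 0 cm, and the mismatch is closed either by revising the belief (perception) or by moving the hand (action).

```python
def reduce_error(belief: float, world: float, mode: str,
                 rate: float = 0.5, steps: int = 10) -> tuple[float, float]:
    """Shrink the mismatch between a belief and the world in one of two ways.

    mode="perceive": change the internal state (belief) to fit the world.
    mode="act":      change the external state (world) to fit the belief.
    """
    for _ in range(steps):
        error = world - belief  # prediction error: what is there minus what I expect
        if mode == "perceive":
            belief += rate * error
        elif mode == "act":
            world -= rate * error
        else:
            raise ValueError(f"unknown mode: {mode!r}")
    return belief, world

# Hypothetical example: I predict my hand is at 10 cm; it is actually at 0 cm.
print(reduce_error(belief=10.0, world=0.0, mode="perceive"))  # belief moves to the hand
print(reduce_error(belief=10.0, world=0.0, mode="act"))       # hand moves to the belief
```

Either way the prediction error ends up near zero; what differs is which side of the boundary did the changing.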

Each of our predictions has a level of precision attached to it, which reflects how certain we are about that particular prediction. Perception and action then become functions of precision - we interact with the world in such a way as to maximise the precision of our predictions. So, when you looked at the camel more closely, you were increasing the precision of your belief about whether you saw a camel.
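If we treat precision as inverse variance (the usual convention), "looking more closely" just means getting an observation with a smaller variance. The sketch below assumes Gaussian beliefs and invented numbers on a scale from 0 (lamppost) to 1 (camel).

```python
def gaussian_update(mean: float, var: float, obs: float,
                    obs_var: float) -> tuple[float, float]:
    """Combine a Gaussian belief with a Gaussian observation; precisions add."""
    precision = 1 / var + 1 / obs_var
    new_var = 1 / precision
    new_mean = new_var * (mean / var + obs / obs_var)  # precision-weighted mean
    return new_mean, new_var

# Invented numbers: 0 = lamppost, 1 = camel. We start unsure, halfway between.
prior_mean, prior_var = 0.5, 0.25

# Same observation ("looks like a lamppost"), taken with low vs high precision.
glance_mean, glance_var = gaussian_update(prior_mean, prior_var, obs=0.0, obs_var=1.0)
close_mean, close_var = gaussian_update(prior_mean, prior_var, obs=0.0, obs_var=0.01)

print(f"glance:     mean {glance_mean:.3f}, variance {glance_var:.3f}")
print(f"close look: mean {close_mean:.3f}, variance {close_var:.4f}")
```

The same observation, taken with higher precision, both moves the belief further and leaves it far less uncertain.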

Where’s your evidence for this in the brain tho bro?

In the visual system, there are about twenty times as many connections which project backwards, from V1 (the first and simplest stage of the visual cortex) to the lateral geniculate nucleus (the relay station just before V1 in visual processing), as there are connections which project forwards. Backwards connections are thought to be modulatory, and involved in issuing predictions, while forward connections convey prediction errors.

If the brain merely took in information from the outside world, processed it, and then acted upon it, this mismatch wouldn’t make much sense. Instead, the discrepancy is more consistent with the idea that the brain has a model of the outside world, and mostly uses visual data to check that model.

All of this explains why you might see the world in an imperfect or biased way. The models aren’t there to accurately represent the outside world. They’re there to give you what Giovanni Pezzulo calls affordances, or opportunities for action. In his work, he suggests that there also exist hierarchies of action, where the brain makes predictions based on the expected rewards of different action paths, and different layers of the cortical hierarchy operate to realise those rewards. Planning, and the future, are incorporated into the active inference model.
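Weighing action paths by their expected rewards can be caricatured as scoring each option and turning the scores into choice probabilities with a softmax. This is a deliberately simple sketch with made-up options and numbers; it scores paths by expected reward alone, which is a simplification of how active inference actually evaluates plans.

```python
import math

def policy_probabilities(policies: dict[str, list[tuple[float, float]]],
                         temperature: float = 1.0) -> dict[str, float]:
    """Softmax over the expected reward of each action path.

    Each policy maps to a list of (probability, reward) outcome pairs.
    """
    expected = {name: sum(p * r for p, r in outcomes) for name, outcomes in policies.items()}
    top = max(expected.values())  # subtract the max for numerical stability
    weights = {name: math.exp((v - top) / temperature) for name, v in expected.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}

# Hypothetical affordances of a kitchen, with made-up outcome probabilities and rewards.
options = {
    "make toast": [(0.9, 2.0), (0.1, -1.0)],    # usually works, occasionally burnt
    "cook a roast": [(0.5, 5.0), (0.5, -2.0)],  # bigger payoff, riskier
    "do nothing": [(1.0, 0.0)],
}
print(policy_probabilities(options))
```

Lowering temperature makes the choice more decisive; raising it spreads probability more evenly across the options.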

This is a pretty good way of modelling human behaviour, as models go. It is useful for explaining how basic motor actions work, as well as why it’s hard to change someone’s mind - people often have strong priors about their basic beliefs as a defence mechanism.

Whew. I’m going to stop here for now. There’s a LOT more to this idea: The Dark Room problem! Ashby and Conant’s Good Regulator theorem! Self-evidencing! Notions of Surprise! Enough Markov blankets to warm the Snow Queen’s heart! Kullback-Leibler Divergence! And many more things I do not understand! All that coming up next time on King Cnut. For now, here’s the weather.

But seriously, I hope this acts as a useful primer. Slowly understanding this theory has changed the way I think about the world, and I think it will continue to do so as I understand it better.

For those of you who are very interested and can’t wait for me to try and unravel all the threads:

The best introductory book to my mind (pun intended) is Being You by Anil Seth, which covers a lot of the key areas, and is relatively accessible. It’s not strictly about this topic - he also discusses consciousness and interoception and other parts of brain function.

Surfing Uncertainty by Andy Clark is an in-depth review of the topic, and is hard-going but is specifically on active inference. There’s a good review of it by Scott Alexander.

If you want to jump in at the deep end, and you are familiar with advanced statistical physics, you can try Active Inference, by Karl Friston, Thomas Parr and Giovanni Pezzulo. I do not recommend this; that book hurts my head. It is all free online, though.
