This is the second part of my review essay of How We Learn by Stanislas Dehaene. It’s somewhat shorter, because people keep telling me I write long things. There are three central themes in this post: the manipulation of memory, phonics, and the neuronal recycling thesis.

MEMORY MANIPULATION

Mice freeze when they are afraid. Usually if animals have been afraid in one place, when they re-enter that place they become afraid again. Researchers found that if you trigger a specific set of granule cells in the dentate gyrus in the hippocampus, you can make mice remember times when they were afraid. Here’s what they did:

Put mice in nice, safe, Location X. Let them chill out, learn the space, and feel the vibe.

Put mice into Location Y. Start a fear conditioning process, whereby you shock their feet. While you do this, activate the granule cells that encode for Location X.

Put the mice back into the nice, safe, Location X. The mice start freezing. Lo and behold, the mice are now afraid of Location X, despite the fact Location X never did anything to them and actually had great vibes.

You can then make mice feel better about themselves by activating place cells that were active when they felt good. How do you know when a mouse feels good? In science speak, male mice feel good when they get “exposure to a female mouse in a modified homecage”. In regular people speak, male mice feel good when they’re getting their fuck on. You record those place cells, and then activate them again later on. If you do this, mice score lower on metrics measuring depression.

How do we know when mice are depressed? One method uses the Tail Suspension Test. You hold a mouse by its tail for six minutes, and see what percentage of that time it spends struggling. The longer it spends struggling, the less depressed it is. If you give mice things that usually make humans feel less depressed, they usually spend longer struggling, so this metric should be taken seriously, even if it does involve sticking tape to mouse tails and watching them with a furrowed brow.

Why is this interesting? Activating the place cells where they had positive experiences seems to have immediate effects on mouse depression. When we give people anti-depressants, there’s usually a long period of waiting around - the NHS website suggests that you need to wait at least 1-2 weeks for an effect. If you could activate positive experiences in a human brain to immediately remediate depression, that would be a huge breakthrough.

Similarly, Kim and Cho (2017) show that you can lessen fear responses by weakening synapses that produce them, while de Lavilléon et al. (2015) show that you can incentivise mouse behaviour by injecting them with dopamine while they sleep. Basically, while they sleep, most mammals activate place cells of places they’ve been during the day. This is very cool, and you can watch the sleeping mice remap the spatial environments they explored during the day via the activity of these cells.

If you inject dopamine while the mouse is virtually ‘at’ a certain place, you can mess with their reward functions, and get them to associate a specific place with a massive reward. The mice will wake up and sprint to the location they were at in their mouse dreams when you swamped them with dopamine.

It seems like it should be possible to do this in humans, because we also use grid and place cells to navigate, and also replay events during sleep.

SOUNDING IT OUT

By the time of your first birthday, your ability to distinguish phonemes is essentially locked in place. The blocks of sound that make up your native language(s) are fixed, and it takes immense effort to undo the ossification of these phonemes. You will have heard the effects of this when other people speak English. You can listen to Munenori Kawasaki talk if you want to remind yourself:

In Japanese, the /R/ and /L/ phonemes are not differentiated. When a Japanese speaker like Kawasaki says ‘English’, it can come out as ‘Engrish’. Native English speakers are not immune from this. Here is the word for ‘mask’ in Korean:

And here’s the word for ‘daughter’:

To me, they sound pretty much identical, although the beginning of the word ‘daughter’ is somewhat clipped. If I heard these two words in a sentence, spoken at the pace of a native speaker, I’d have no chance. I definitely couldn’t produce them correctly.

The difference emerges because one word is aspirated and the other isn’t, which affects the pronunciation. It may be hard to hear this because I cannot speak Korean and had to get a Google Language robot to read it. Ask your Korean friends. If you don’t have any Korean friends, get some.

If you’re curious about aspiration, it’s to do with the way that your mouth forms words, and you can watch it in action! If you’ve read this far you probably speak some English, so grab a napkin and hold it in front of your mouth. Say the word ‘spin’. The napkin should remain static. Then say the word ‘pin’. The napkin should go nuts. I believe something like this is happening with ‘mask’ and ‘daughter’.

There are plenty of phoneme pairs like this that you could have learned, that your brain was primed to learn, but that it did not learn. You learn the phonemes that are useful for your native language, and you specialise fast.

Anyone desperate to learn a tonal language might be panicking right now. But it’s okay. You’re not locked in. You can learn these sounds now - techniques include amplifying the difference between the phonemes and then gradually reducing the amplification - but it’s just harder now than it would have been when you were 0.5 years old.

The adult brain remains much more plastic than many people allow for. Wong et al. got people to try and improve their ability to detect pitch, and many adults can learn to do this:

Absolute pitch (AP) refers to labelling individual pitches in the absence of external reference. A widely endorsed theory regards AP as a privileged ability enjoyed by selected few with rare genetic makeup and musical training starting in early childhood. However, recent evidence showed that even adults can learn AP, and some can attain a performance level comparable to natural AP possessors.

The mix of aspirated sounds, unaspirated sounds and other contrasts is partly why some languages are easy to learn and others are hard. Italian is very regular, and all the vowels always sound the same. English, by all accounts, is very irregular and a nightmare to pick up. We chose a super bad global language, and as a world community, dovremmo parlare italiano.

Importantly, unless some language is learnt in this critical early period, problems arise. If deaf children don’t receive cochlear implants by the age of eight months, they already have permanent deficits in syntax. Sign languages, in case you are wondering, are full languages and count as languages. If deaf children don’t get cochlear implants and they don’t learn a sign language, they’re in trouble for the rest of their lives.

Dehaene:

Those children never fully understand sentences where certain elements are moved around, a phenomenon known as “syntactic movement”. In the sentence, “Show me the girl that the grandmother combs”, it is not obvious that the first noun phrase, “the girl”, is actually the object of the verb “combs” and not its subject. When deaf children receive cochlear implants after the age of one or two years, they remain unable to understand such sentences.

New York recently changed its school curriculum from one based on ‘Balanced Literacy’, which emphasised looking at whole words and using pictures to work out words you didn’t understand, to one emphasising phonics, or the individual sounds of words. The second method works much better for teaching schoolchildren to read.

Dehaene then mentions a possible way around this. These circuits seem to close themselves because some neurons contain a protein called parvalbumin. This protein causes these neurons to progressively surround themselves with a hard matrix, which locks synapses in place. If we could release neurons from this rigid net, synaptic plasticity may return. And we can, with fluoxetine (Prozac), which presents exciting possibilities for attacking psychiatric disorders.

Although it is traditionally associated with muscle function, acetylcholine is one of the primary neurotransmitters of the human nervous system, contributes to a lot of important areas, and does far too many things to list here. Another protein, known as “Lynx1”, when present in a neuron, inhibits the massive effects of acetylcholine on synaptic plasticity. Inhibiting Lynx1 in turn seems to have promising effects for restoring the effects of acetylcholine in adults.

What does all that mean? Well, mice without the Lynx1 protein recover much faster from problems with lazy eyes. You probably want Lynx1 if your vision is fine - why allow something that works to be altered? - but inhibiting Lynx1 could allow the restoration of vision in others.

You may have heard of transcranial direct current stimulation (tDCS), where you fire a weak current across brain regions, in order to make certain brain areas more or less active, depending on what you’re trying to do. Yes, this is safe. You can apply tDCS to the brains of macaques in order to improve their performance on an associative learning task. If you did this to humans, their performance would also likely improve on an associative learning task.

Should we do this to humans? Dehaene talks excitedly about its prospects for rescuing people trapped in a deep depression. If it does get used for this, other people will almost certainly try and use it as a hacky way to enhance their brain power. Plus ça change. Will this work? I’m unsure. We’re on the edge here, folks.

NEURONAL RECYCLING BINS

Dehaene gets most excited about his Neuronal Recycling thesis. The name is more elegant in French - recyclage holds the dual connotation of recycling materials and also retraining later in life - but the idea is simple.

As I mentioned above, your cortex is not plastic at a fundamental level. We are limited by the existing circuitry that we have in our brains, which came from an evolutionary hodgepodge that optimised partly for survival. This means that modern tasks often end up constrained by older circuitry in strange ways.

For instance, complex mathematics was not particularly useful in the wild, so we didn’t really develop specialised circuitry to do algebra. This means we rely on older circuits for most of our mathematical prowess. Various groups of creatures, like monkeys and ravens, can subitise (assess how many things are in a group), and determine whether some groups have more objects in them than others.

These circuits are fairly limited - activating the number ‘5’ in cortical circuits doesn’t exclusively activate ‘5’, it also partly activates the neighbouring ‘4’ and ‘6’ bins.

This means it’s much easier for you to count the difference between these two sets of Britneys:

Than between these two sets of Britneys:

The difference isn’t that great. You can still do it. It just takes longer, because of that neural overlap. That the second task should take longer might seem obvious to you, but there’s no inherent reason why it should be this way.

This happens for subtraction as well: 9-8 takes longer than 9-2.
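If you want to see how overlapping bins could produce this effect, here’s a minimal sketch. It assumes each number neuron has a Gaussian tuning curve around its preferred number; the Gaussian shape, the `width` parameter and the `overlap` measure are all my own illustrative choices, not Dehaene’s model.

```python
import math

def tuning_curve(preferred, n, width=1.0):
    """Response of a hypothetical neuron tuned to `preferred` when shown n items.

    The Gaussian shape and `width` are assumptions made for illustration.
    """
    return math.exp(-((n - preferred) ** 2) / (2 * width ** 2))

def overlap(a, b, width=1.0, max_n=20):
    """Crude confusability score: how much the curves for a and b overlap."""
    return sum(
        tuning_curve(a, n, width) * tuning_curve(b, n, width)
        for n in range(max_n + 1)
    )

# Showing 5 items fully drives the '5' neuron, but also partly drives '4' and '6'.
responses = {p: round(tuning_curve(p, 5), 2) for p in (3, 4, 5, 6, 7)}
print(responses)

# 9 and 8 share far more activity than 9 and 2, so they are harder to separate.
print(overlap(9, 8) > overlap(9, 2))
```

Under these assumptions, numbers that sit close together share most of their activity, which is one way to picture why 9-8 is slower than 9-2.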

We have a mental number line that we use to navigate numbers, and this affects the way we perceive all numbers. Things are more precise at the low end, and less precise as the numbers get higher. This is why during negotiations, people will quickly give up thousands of pounds when dealing with house sales, and then haggle on eBay for a second-hand copy of a Super Mario game that costs $5.
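One common way to picture a number line that gets fuzzier at the high end is to compress it logarithmically, so that what matters is the ratio between two numbers rather than the gap. The sketch below assumes that log compression, and the house and game prices in it are made up for illustration.

```python
import math

def log_distance(a, b):
    """Distance between two quantities on a log-compressed number line (an assumption)."""
    return abs(math.log(a) - math.log(b))

# Hypothetical figures: conceding 5,000 on a 250,000 house
# versus haggling 1 off a 5 game.
house = log_distance(250_000, 245_000)
game = log_distance(5, 4)

# The house concession is a much smaller step on the compressed line.
print(house < game)
```

On this picture, knocking 5,000 off a house barely moves you along the line, while knocking 1 off a 5 game is a big jump, so the small haggle feels like the one worth fighting over.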

Why do numbers get binned in this way? Why have overlap between the neural bin for ‘4’ and the neural bin for ‘5’? Most of the work I’ve read in this space - this is a useful summary of multiple papers - doesn’t really seem to offer an obvious answer, although there may be one out there that I’m just missing. There are numerous (haha) possibilities that spring to mind:

It’s a hang-up of a previous system. The initial way that brains learned to process numbers just needed to know ‘less than’, ‘more than’ or ‘equal to’, instead of precise estimates of quantities. “Should I forage in that bush or this bush?” is a question answered by quickly subitising the number of visible berries in the bush, as opposed to precisely calculating them. Since our brains build on this initial system, they still retain architecture that works in that way.

It evolved to allow for uncertainty. This is similar to the previous idea, but suggests that counting neurons evolved that way intentionally because animals are exposed to a lot of unreliable information. If you see some number of shadows in a blizzard, it’s best not to think too hard about exactly how many polar bears are bearing down on you, and just work out whether your tribe can fight them.

It facilitates sums. Having neurons activate around the current represented number may make it easier to perform mental operations on those numbers, and you’re usually working with numbers that are closer to each other than you are with numbers that are far apart.

I don’t know much about Your Brain on Numerosity, and those ideas above are all speculation, so take them with a pinch of salt.

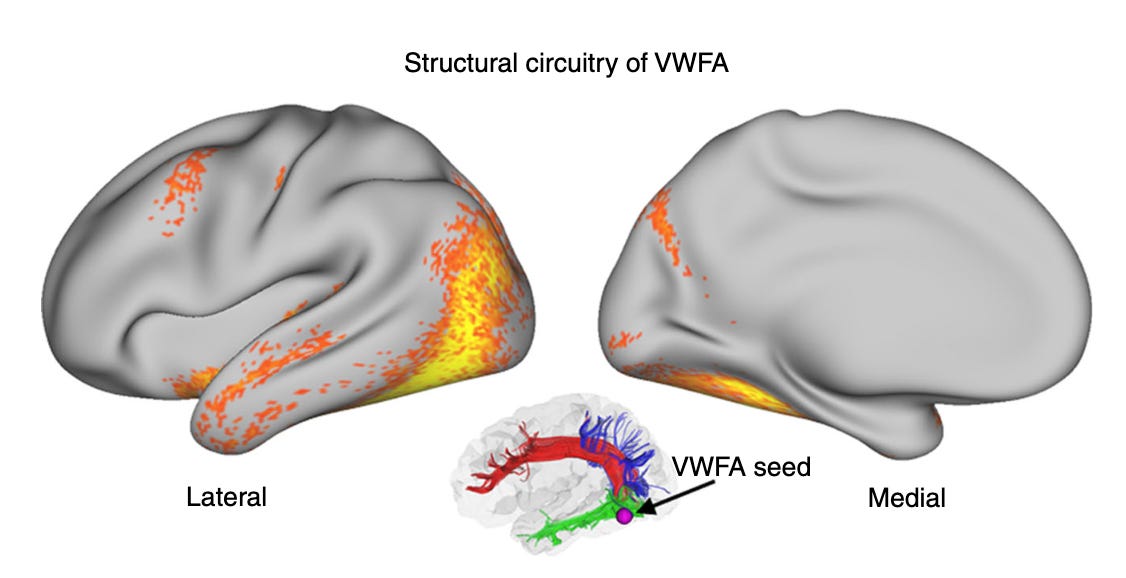

Reading similarly recycles circuitry, this time a visual circuit that allows children to recognise and name objects, animals and people. The acquisition of literacy develops a region of the visual cortex that Dehaene calls the “visual word form area” or VWFA.

This may shock you, but there is a lot more activity in response to text in the brains of people who can read than in the brains of people who cannot read. Remember that a lot of brain areas are organised hierarchically - areas lower in the hierarchy code for simpler elements of stimuli, while higher areas code for more complex elements of stimuli. In the literate brain, early visual areas light up more in response to small print. But in illiterate brains, early visual areas respond more intensely to faces.

What’s happening?

Much of the visual cortex is unspecialised at birth, and we gradually fill it out with things that we want to see. Facial recognition seems to develop organically in that specific area, unless you learn to read, in which case language bodychecks any future development of facial processing, and shunts it over to the right hemisphere. This effect is so strong that computer algorithms can detect whether a child can read simply by the response in particular areas of the brain to faces.

Here’s a lovely representation of this from this paper:

THE INVARIANCE OF LEARNING?

There’s one more element of How We Learn that I’d like to flag, if not discuss in great depth, just because I thought the idea was interesting. Dehaene believes that every learner learns in the same way.

This is partly a refutation of the VARK learning styles idea (visual, auditory, reading, kinaesthetic strategies), which has been superseded. Any learning which takes place across more than one of those styles of learning will be more effective than learning which takes place in only a single domain.

But he also suggests that people with learning disabilities, such as dyslexia, learn in a fundamentally similar way to people without learning disabilities. The only differences in learning, for Dehaene, rely on prior knowledge, speed of uptake and attentional patterns. These are quantitative differences, not qualitative ones. Dyslexics may need written material presented more slowly, but not in a fundamentally different way to those who do not have dyslexia.

Part of the reason this post took so long is that I tried to look into this, and the material got very complicated immediately (other reasons include laziness and distraction). If you’re really interested in this idea, I recommend this article, which discusses types of dyslexia and offers more useful commentary than I can. But the idea that all learning takes place in a similar way is interesting, even if I can’t ascertain whether it is generally accepted.

Anyway, I need to move on from How We Learn now, but I hope these ideas are useful in thinking about learning.

SOME STUFF I ENJOYED RECENTLY

Left-handers are on average more violent because holding weapons weirdly is an advantage for fighting, provided not too many people are left-handed.

Nice interview about attention with Gloria Mark. David Epstein is very friendly and always answers questions, so I’d recommend his blog.

Been reading about Georgism, and it’s compelling as an idea. Good places to start include Azad and Bram in Wired, and Lars Doucet’s Progress and Poverty book review.

If you’re looking for some new music, I enjoyed Lil Ugly Mane’s new album very much: