How should a brain learn?

In which we tackle the complementary learning systems theory

In the 1980s, scientists were playing around with small models of neurons, trying to get them to simulate parts of human behaviour, such as perception or memory.

In those halcyon days, neural networks were so small that you could see all of the parameters of your neural network, airplane security was someone wishing you g’day, and the vape did not exist. In 1989, McCloskey and Cohen released a now-landmark paper, which is very much the Elton John of the ‘forgetting in neural networks’ literature.

They were training a neural network to solve problems, and they started with basic addition. First, they trained their network on ‘ones’ problems. These are tough problems such as 1+1, 1+2, 2+1, 1+3… and so on. There are 17 of these in total, if you leave out 1+0 and 0+1. The network managed to learn to be able to solve these problems, just as you did when you were five.

Then they wanted to improve their neural network. So they trained the initial network, which had shown its budding maths ability, on ‘twos’ facts (2+1, 2+2, must I go on?). If you’re smart, like a human, instead of stupid, like a 1989 neural network, you can work out quickly that there are 17 of these as well.
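If you’d rather not take the 17 on trust, here is a quick count. It is a minimal sketch that assumes the operands run from 1 to 9 (so no zeros), and the function name `facts_with` is made up for illustration.

```python
def facts_with(digit, lo=1, hi=9):
    """List every single-digit sum a+b (operands lo..hi) that contains `digit`."""
    return [(a, b)
            for a in range(lo, hi + 1)
            for b in range(lo, hi + 1)
            if digit in (a, b)]


ones = facts_with(1)   # 1+1, 1+2, 2+1, 1+3, ...
twos = facts_with(2)   # 2+1, 1+2, 2+2, ...
shared = set(ones) & set(twos)

print(len(ones), len(twos))  # 17 17
print(sorted(shared))        # [(1, 2), (2, 1)]
```

In this count the two sets only overlap on 1+2 and 2+1, so almost all of the ones problems never show up again during the twos training.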

What happened? The machine completely forgot all the old ‘ones’ stuff, and started living in two-land. Here’s a chart:

In fact, any residual performance the network had on ones problems was only because the machine was just computing everything as if it was a two. Sometimes the way that the neural network processed data meant that if it was asked a ones problem, the answer to the twos problem it substituted in instead was pretty close, so it technically wouldn’t be that wrong. On a practical level this meant that it was very wrong.

Imagine it’s the weekend, and you decide to train a neural network on cats, because you want to have a ripper weekend. You show it lots of pictures of cats, and you say, ‘This is a cat!’

It extracts some set of information corresponding to what-a-cat-is-like, and then when you show it a new picture of a cat, it says, ‘Quello è un gatto!’ You change the language setting from where you’d accidentally set it to Italian, and show it a picture of a dog. The machine says: ‘Quello non è un gatto!’

You make a note to fix the language setting later. But your machine now knows two things: (1) what is cats (2) what isn’t cats. Importantly, it doesn’t know (3) what is dogs. It just knows they’re not cats.

But wouldn’t it be cool if it could detect dogs? So after you fix the language module, you give it a bunch of pictures of dogs, and it extracts some set of information corresponding to what-a-dog-is-like, and then when you show it a new picture of a dog, it says ‘It’s a dog!’ Then you show it a picture of a cat, and it says, ‘It’s a dog!’

For your neural network, everything is a dog, or not a dog. But it doesn’t know what cats are anymore.

The problem is that when a neural network trains on information, it forms a representation of the data, and it wires all of its weights to reflect that. The term ‘weights’ here describes the strength of the different connections in the network, which combine to produce an output.

If you then add in newer information at a later stage, the model has to rewire itself to reflect this new data, and it often does so by changing all of its weights, including the ones that were detecting cats. The performance goes down drastically.

This is known as ~~dogastrophic~~ catastrophic forgetting.

Catastrophic forgetting is the main issue for computational systems that want to engage in continual learning. This might sound complicated, but it isn’t. It’s what we do every day - learning from new information in the environment. When we build neural networks, we want them to be able to keep learning from the environment. But if we train models on a bunch of data, and then add in new data, catastrophic forgetting can and will occur.
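Here is the cat-and-dog story as a toy you can run. It is a minimal sketch, not McCloskey and Cohen’s setup: a single logistic unit, five made-up features per animal, and training settings chosen purely for illustration. Every name in it (`FEATURES`, `CATS`, `DOGS`, `train`, `accuracy`) is hypothetical.

```python
import math

# Hypothetical toy features, invented for this sketch.
FEATURES = ("fur", "four_legs", "tail", "meows", "barks")
CATS = [(1.0, 1.0, 1.0, 1.0, 0.0), (1.0, 1.0, 0.8, 0.9, 0.0), (0.9, 1.0, 1.0, 1.0, 0.1)]
DOGS = [(1.0, 1.0, 1.0, 0.0, 1.0), (1.0, 1.0, 0.9, 0.0, 0.8), (0.9, 1.0, 1.0, 0.1, 1.0)]
CAT, DOG = 0, 1


def p_dog(weights, bias, x):
    """Probability of 'dog': a sigmoid of the weighted sum of the features."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


def train(weights, bias, examples, epochs=200, lr=0.1):
    """Plain stochastic gradient descent on the logistic loss."""
    weights = list(weights)
    for _ in range(epochs):
        for x, label in examples:
            error = p_dog(weights, bias, x) - label
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def accuracy(weights, bias, examples):
    """Fraction of examples whose predicted label matches the true one."""
    hits = sum((p_dog(weights, bias, x) > 0.5) == (label == DOG) for x, label in examples)
    return hits / len(examples)


cat_data = [(x, CAT) for x in CATS]
dog_data = [(x, DOG) for x in DOGS]

# Phase 1: cats only.
w1, b1 = train([0.0] * len(FEATURES), 0.0, cat_data)
cats_before = accuracy(w1, b1, cat_data)

# Phase 2: carry on from the same weights, dogs only.
w2, b2 = train(w1, b1, dog_data)
cats_after = accuracy(w2, b2, cat_data)
dogs_after = accuracy(w2, b2, dog_data)

print("cats right after phase 1:", cats_before)
print("cats right after phase 2:", cats_after)
print("dogs right after phase 2:", dogs_after)
for name, before, after in zip(FEATURES, w1, w2):
    print(f"{name:>9}: {before:+.2f} -> {after:+.2f}")
```

With these settings the unit should get every cat right after phase one and call every cat a dog after phase two. The printed weights show why: the features cats and dogs share (fur, legs, tail) get dragged upwards to make dogs look doggy, and the cats get dragged along with them.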

To Solve The Problem, Get More Learning Systems

But aren’t neural networks based on our own brains? Why doesn’t that happen to us? Why is it that every time we see a new dog we don’t immediately forget everything we knew about cats (paging Mitch Hedberg)?

It sounds kind of stupid when you put it that way.

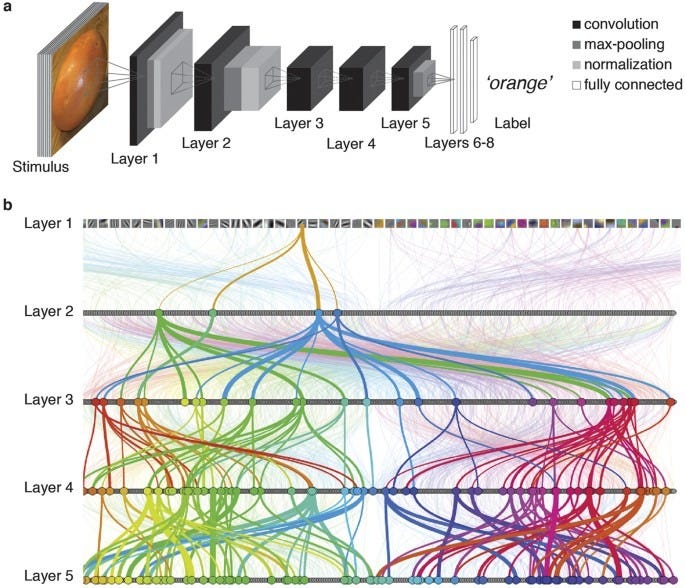

Firstly, it’s useful to mention here that if you expand your neural network so it looks like this:

Instead of like this:

This problem is reduced significantly.

But it still occurs, and large language models (LLMs) and algorithms (you know, from the news) all have to find a way to solve this problem.

Humans solve this problem by having two learning systems.

The first is the hippocampus. If you’ve ever been interested in the mechanics of memory, you’ll have come across this region. Although it’s often described as the key memory storage unit, it actually does something a little more complicated - it encodes new memories, and then moves them off-site for storage, much as all the major copyright libraries do when they get 700 editions of the latest Lee Child novel.

The second is the neocortex, which is basically most of the bit people think of when they think of a brain.

Here’s a picture of the neocortex:

Hmm. Not sure how that got in there. Here’s an actual picture of the brain:

All the wrinkly bit on top is neocortex.

Initially, scientists operated on a hippocampus = FAST, neocortex = SLOW principle. The argument ran that the hippocampus would rapidly work to encode episodic memories as they unfolded in front of you.[1] These would then be slowly transferred to the neocortex over a period of time, often during sleep.

But the neocortex isn’t just moving the hippocampus’ output to its own labyrinthine corridors. It’s looking for patterns and extracting regularities from the environment, and changing the data in order to match that. Some interesting experiences are stored (Anita drank all the milk and didn’t replace it). But most experiences are re-rendered as statistical generalities (Anita is a selfish cow).

This is why episodic and semantic information end up in different parts of the brain — you can read my earlier post for more on this:

Learning Is Slow For A Reason

New information from the hippocampus is mixed with pre-existing knowledge in the neocortex, in order to avoid the problem of catastrophic interference.[2]

This is partly why most learning feels slow. If it felt quick, you would probably be changing the parameters of your brain’s knowledge too much, which would destabilise the entire structure.

In the same way, if we train our neural network above on cats and dogs at the same time, in an interleaved manner, catastrophic forgetting doesn’t occur. Learning these things sequentially causes the problem.[3]
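To see the difference between sequential and interleaved training, here is a toy cat-and-dog classifier you can run. It is a sketch under loud assumptions: a single logistic unit, made-up features, and hypothetical names throughout (`CATS`, `DOGS`, `train`, `accuracy`). Both regimes see exactly the same examples the same number of times; only the order changes.

```python
import math

# Hypothetical toy features: (fur, four_legs, tail, meows, barks).
CATS = [(1.0, 1.0, 1.0, 1.0, 0.0), (1.0, 1.0, 0.8, 0.9, 0.0), (0.9, 1.0, 1.0, 1.0, 0.1)]
DOGS = [(1.0, 1.0, 1.0, 0.0, 1.0), (1.0, 1.0, 0.9, 0.0, 0.8), (0.9, 1.0, 1.0, 0.1, 1.0)]
CAT, DOG = 0, 1


def p_dog(params, x):
    """Sigmoid of the weighted sum; params holds five weights, then a bias."""
    z = params[-1] + sum(w * xi for w, xi in zip(params, x))
    return 1.0 / (1.0 + math.exp(-z))


def train(params, examples, epochs=200, lr=0.1):
    """Stochastic gradient descent on the logistic loss, in the order given."""
    params = list(params)  # copy, so the caller's list is left alone
    for _ in range(epochs):
        for x, label in examples:
            error = p_dog(params, x) - label
            for i, xi in enumerate(x):
                params[i] -= lr * error * xi
            params[-1] -= lr * error
    return params


def accuracy(params, examples):
    """Fraction of examples whose predicted label matches the true one."""
    hits = sum((p_dog(params, x) > 0.5) == (label == DOG) for x, label in examples)
    return hits / len(examples)


cat_data = [(x, CAT) for x in CATS]
dog_data = [(x, DOG) for x in DOGS]
fresh = [0.0] * 6

# Sequential: all the cat training first, then all the dog training.
sequential = train(train(fresh, cat_data), dog_data)

# Interleaved: cat, dog, cat, dog... within every epoch.
mixed = [example for pair in zip(cat_data, dog_data) for example in pair]
interleaved = train(fresh, mixed)

results = {
    name: (accuracy(params, cat_data), accuracy(params, dog_data))
    for name, params in (("sequential", sequential), ("interleaved", interleaved))
}
for name, (cat_acc, dog_acc) in results.items():
    print(f"{name:>11}: cats {cat_acc:.1f}, dogs {dog_acc:.1f}")
```

With these settings the sequential unit should end up calling everything a dog, while the interleaved one keeps both animals straight. When the examples are mixed, the pushes on the shared weights roughly cancel, and the learning lands on the features that actually tell the two apart.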

But we have to keep learning over our lifetimes, because that is biologically useful. You cannot simply design a brain or a neural network and then expect that to be able to survive in a changing and hostile world. This was the problem of the very first neural network, designed by Walter Pitts and Warren McCulloch back in 1943 - it couldn’t learn from experience.

Even very simple animals like fruit flies (or Drosophila) can learn from experiences. Here’s how Christopher Summerfield puts it in These Strange New Minds:

The strength of connections in the fruit-fly brain can adapt. In fact, like almost every other animal on Earth, Drosophila is able to learn - to adjust its behaviour with experience. This has been known since at least the 1970s, with early studies in which Drosophila were given the choice of flying down a blue or a yellow tunnel, and received an electric shock if they made the wrong choice (they rapidly learned to choose the unshocked route). Fruit flies can be trained to prefer different odours (such as ethanol or banana oil), to avoid different zones in their training chamber because they are often unpleasantly hot, or to veer in one direction or another in response to images of differently coloured shapes. They can even be trained to prefer one potential mate over another on the basis of their eye colour, by teaching male flies that red-eyed females tend be more sexually receptive, whereas brown-eyed ones are generally less game.

As far as I’m aware, scientists are yet to test whether the last effect can be reversed by playing Van Morrison’s Brown Compound-Eyed Girl.

Your neocortex contains billions of neurons, with trillions of connections between them. The optimal rigging of these neurons is not fixed - much as the sail on a yacht has no true optimal configuration. Different knots and reefs and angles can all help wrest certain behaviour out of the boat. Maybe you want it to go fast, or slow, or to catch a choppy easterly. The optimality of any of those choices comes from your surroundings.

I’m using the sailing analogy to try to capture the importance of the previous configuration of connections. When we learn new things, the optimal adjustment of our connections based on this new experience is highly contingent on the previous layout of our brains. Experience matters here - children are likely to update these connection maps more rapidly than adults, even if the signals coming in are noisy or weak - because new information is more valuable if you have less old information.
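One way to picture why less old information makes new information worth more is a running average. This is an analogy rather than a claim about how neurons do it, and the function name `update_estimate` is invented for the sketch. The step size is 1/n, so the more a learner has already seen, the less any single new experience moves the estimate.

```python
def update_estimate(estimate, n_seen, observation):
    """Fold one new observation into a running mean of n_seen earlier ones."""
    n_seen += 1
    estimate += (observation - estimate) / n_seen
    return estimate, n_seen


# Same surprising observation, different amounts of prior experience.
child, _ = update_estimate(estimate=0.0, n_seen=2, observation=10.0)
adult, _ = update_estimate(estimate=0.0, n_seen=200, observation=10.0)

print(round(child, 2), round(adult, 2))  # 3.33 0.05
```

The ‘child’ with two prior experiences moves a third of the way towards the new observation; the ‘adult’ with two hundred barely budges.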

The relationship between the hippocampus and the neocortex is complicated, and in this complication a great variety of fascinating symptoms appear.

Your memory is trying to learn statistical regularities in the environment, in order to generate predictions that inform behaviour. Usually, learning should be gradual. But there are occasions where it should be fast - learning about certain events should be privileged.

If you’re a child learning about cats, you can take your time learning about the general category of cats, deciding which animals fit into it (Siamese, Maine Coons and Exotic Shorthairs) and which don’t (dogs, bees, giraffes).

But if you’re a child and a cat jumps onto your head, claws out, you want that piece of learning to one-shot across your brain with a signal saying, NEVER AGAIN.

So the hippocampus can’t just feed information into the neocortex and say, here’s a bunch of nonsense, go make sense of it, as I used to do to my teachers when I handed in homework. It must have ways of marking specific experiences as important, and worth learning from, rather than just another piece of a complicated puzzle.[4]

Build Some Schemas

Another side to this is that the schemas built in the neocortex matter. If new knowledge is consistent with previous schemas of knowledge, then it is incorporated into the neocortex much faster.

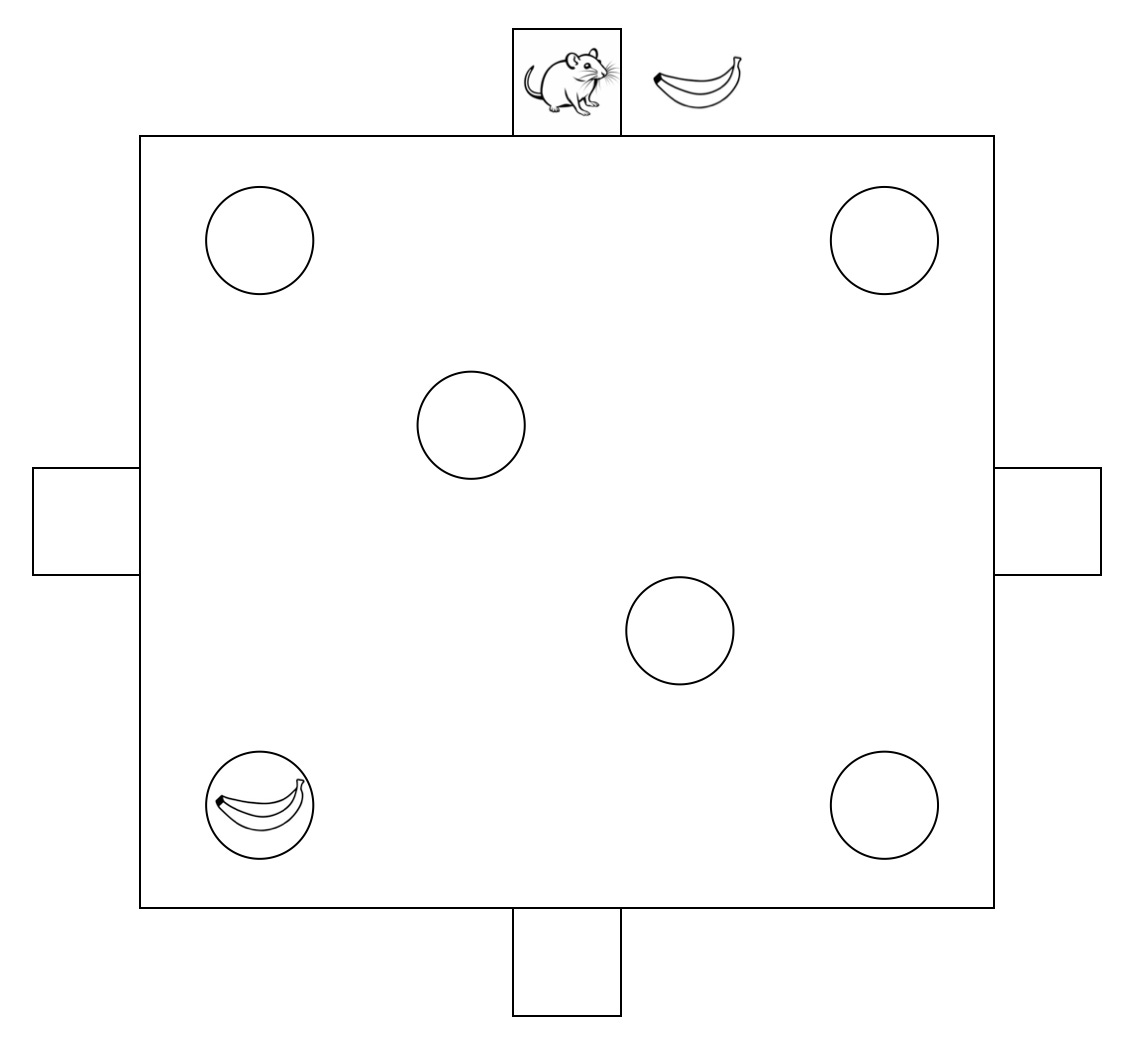

In one study, rats were trained to forage for food by linking a flavour with a location. So you start a rat in a box, and give it a flavoured snack, e.g. a banana-flavoured snack. If it then goes to a specific banana-hole in the arena and digs there, it receives more food. Sometimes the rats would dig in the wrong holes, and they wouldn’t get food. Over time, the rat learns to link each flavour to a hole. But this takes a while, as the rat has to learn the rules of the flavour-hole game.

You then run the experiment again, a few days later, leaving the rats some time to transfer those memories into their neocortical areas. Now you add in new flavours. The rats learn these new flavour-hole pairings much more quickly, and accurately, because they have learned the ‘flavour-hole’ schema.

One clear takeaway is that knowledge is quicker to obtain if it builds on things that you already know about. Understanding the rules of the specific game you are playing matters, because it will help you integrate new knowledge.

There’s a meta-game happening here too, as any schema you learn will have imperfections. We must continually learn new schemas and update our old ones, and that takes time, because the updating happens in the neocortex. Breaking down information or skill-learning in this way is important, as the information will be retained better.

Something To Think About

The people who design LLMs are obviously aware of catastrophic forgetting. But it isn’t a solved problem - different architectures of machine learning models use various techniques to navigate around it, which I’m not going to go into here.

Here’s a quote from the much discussed AI-2027 paper, released in April this year, which explored a future world with high AI takeoff speeds:

They train Agent-2 almost continuously using reinforcement learning on an ever-expanding suite of diverse difficult tasks: lots of video games, lots of coding challenges, lots of research tasks. Agent-2, more so than previous models, is effectively “online learning,” in that it’s built to never really finish training. Every day, the weights get updated to the latest version, trained on more data generated by the previous version the previous day.

The critical line is this: “Agent-2, more so than previous models, is effectively ‘online learning’.” Now, there are many people working on improving the quality of continual learning. If you’re interested, this is a great summary paper from a few years ago that actually inspired this post.

However, in the short run, most algorithms are going to have to deal with more stringent trade-offs, and this is worth bearing in mind when reading about the new capabilities of AI models. This is especially true when thinking about, say, robots, which cannot hide from new information in the same way LLMs can. Robots have to interact with the world and then react to it, whereas an LLM can just tell you that its training cutoff date was January 2022 and that Queen Elizabeth is looking forward to attending the 2025 Women’s Euros, and nothing bad happens to it.

My guess is that some of these timelines are not accounting for such limitations, which may slow them down, especially ones such as AI-2027 that are probably overoptimistic. From my understanding of the structure of deep-learning algorithms, we might also see models that are good at different things being strung together in order to overcome such problems.

This means if we get to AGI, it might look less like a unitary all-powerful deity and more like a multi-headed octopus with wacky limbs.[5] But what do I know?

[1] The way that encoding occurs in the hippocampus is via a complex and interesting process called sparse conjunctive coding, which I’m planning to break down in a future blog. The hippocampus also engages in something called generative replay, where it will replay information to the neocortex in order to ossify that knowledge.

[2] Specifically, the hippocampus interleaves knowledge into the neocortex. From Kumaran et al. (2016): “According to the theory, the hippocampal representation formed in learning an event affords a way of allowing gradual integration of knowledge of the event into neocortical knowledge structures. This can occur if the hippocampal representation can reactivate or replay the contents of the new experience back to the neocortex, interleaved with replay and/or ongoing exposure to other experiences.”

[3] Specifically, it’s because we are violating the assumption that our data is independent and identically distributed.

[4] I won’t go into single-shot learning here, but here’s a wonderful elaboration if you’d like to know more.

[5] We also might not see under the hood in this way. Even a multi-modular AGI might look unitary to an external observer.